Restrict OpenAI Models and deployment type with Azure Policy

TL;DR

When deploying Azure OpenAI models in the AI Foundry portal, you might unintentionally allow your data to be processed outside of your data residency region. Choose Standard over GlobalStandard, and only use models that support Standard in your region. At the time of writing, that means only gpt-4-1106-preview in Norway East.

The post's featured image was generated by Claude (free version, if you can’t tell) and is supposed to show a police officer restraining an anthropomorphized Azure Policy icon. Not the best image, but I think it fits!

Background

I have been working with Azure OpenAI lately, deploying AI Foundry hubs and AI Foundry projects with service connections to both OpenAI services and Document Intelligence (FormRecognizer). After some testing and proofs of concept I have found some important distinctions between deployment types that may or may not be relevant to your specific use case.

Data processing disclaimer

Please note that there is nothing inherently sinister, wrong, or problematic about processing data outside of your region! Some services do this, often for capacity or resiliency purposes.

Azure AI Foundry Portal

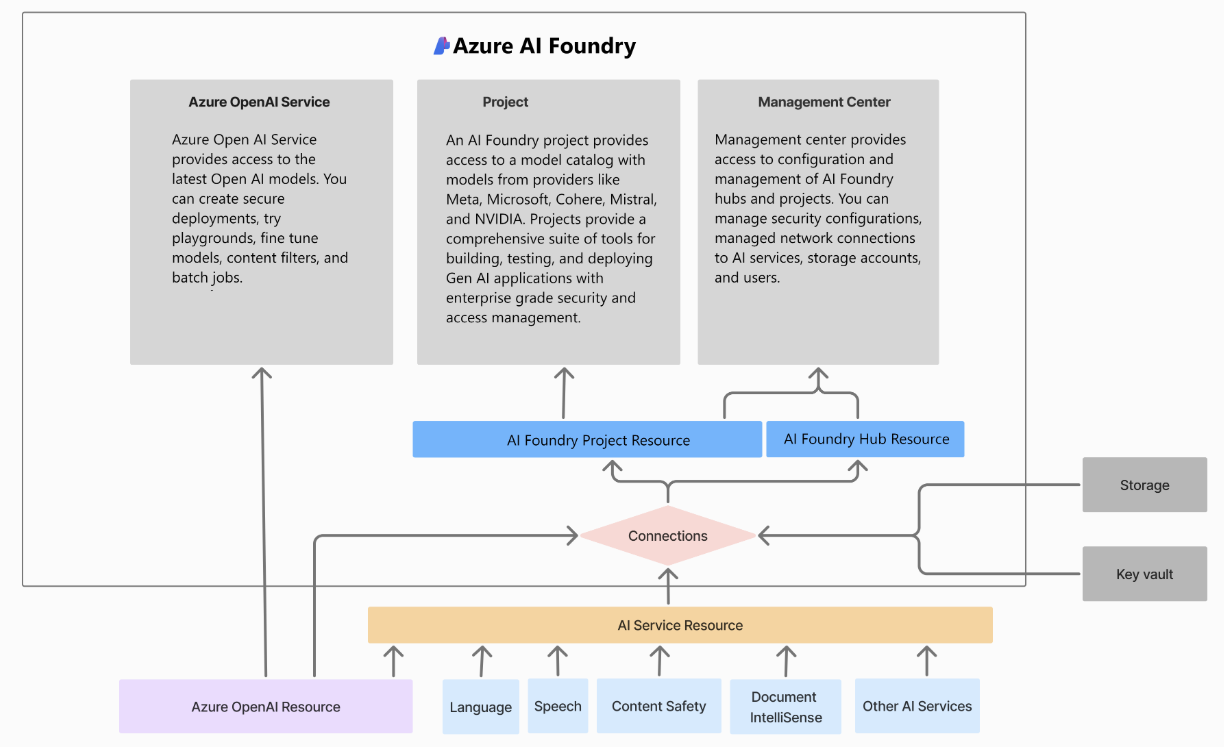

Azure AI Studio was recently rebranded to Azure AI Foundry portal. It is the main portal for interacting with AI services in Azure. You can manage Foundry hubs, Foundry projects, and the relevant AI services there, and you can use the playground to manage and deploy language models.

I will not go into detail about what all of this is, as I have a specific use case in mind with this post. Maybe I will write follow-up posts on deploying the services with Terraform.

Management center

The management center streamlines governance and management of AI Foundry resources such as hubs, projects, connected resources, and deployments.

Azure AI Foundry Hubs

The AI Foundry hub serves as the primary organizational unit within the AI Foundry portal, built on Azure Machine Learning technology. In Azure terms, it’s identified as a Microsoft.MachineLearningServices/workspaces resource with the Hub resource type.

It provides a managed network for security purposes, access to compute resources, centralized management of Azure service connections, project organization and a dedicated storage account for metadata, artifacts, and other uploads.

Azure AI Foundry Projects

An AI Foundry project is a child resource within a hub, utilizing the Microsoft.MachineLearningServices/workspaces Azure resource provider and designated as a Project resource type.

It provides a development environment for AI applications, a collection of reusable components, project-specific isolated storage container for data, project-specific service connections, and deployment capabilities for OpenAI endpoints and fine-tuning.

The following image visualizes how these resources relate to each other

Azure Policy for AI

There are already many built-in policy definitions available, and there are some initiatives from Azure Landing Zones (ALZ), such as Enforce-Guardrails-Cognitive- and Enforce-Guardrails-OpenAI.

More great initiatives can be found in the Enterprise-Scale wiki.

These initiatives are a great starting point, and will give you some form of control over your AI deployments with regards to managed identities, local authentication, and most importantly network access.

Deployment type

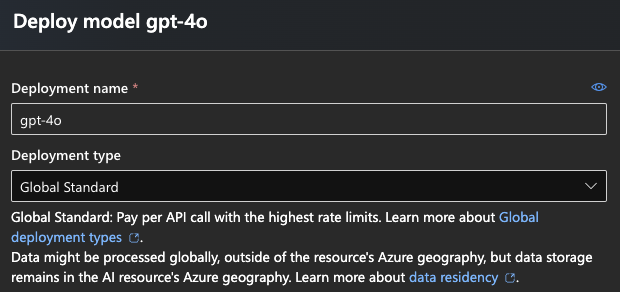

When deploying OpenAI models in your AI services, there is something called “Deployment type”. I did not pay much attention to this when I first deployed gpt-4o and gpt-4o-mini for testing purposes, and chose “GlobalStandard” because this was the only way of getting them in Norway East. Fine and dandy for testing data and just playing around with the GUI.

After the initial deployment, the name “GlobalStandard” raised some questions.

- What does it mean?

- What exactly is global about the model?

- Why is this only available as GlobalStandard and not Standard?

Turns out there is a simple explanation to the why: GPU availability. There simply aren’t enough GPU resources available in Norway East to serve all of the capacity requests for all the models. Not even West Europe or other large regions have access to all the models as Standard deployment type. One of the few that has this is Sweden Central, which luckily is in our vicinity.

You can see a nice table of the availability for both Standard and GlobalStandard.

With regards to what it means: well, it turns out your data might be processed outside of your region when choosing GlobalStandard. This might be fine for your data, but if you are using health, finance, or any other type of sensitive data, this needs some extra consideration.

GlobalStandard basically means that when there are capacity limitations in the chosen datacenter, processing may happen outside of your data residency region. No data is stored outside your region, aside from, of course, the Abuse and Content Filter review process.
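Since the rest of this post leans on Terraform, here is a minimal sketch of where the deployment type shows up in code. It assumes version 4.x of the azurerm provider, where the block is called `sku` (3.x called it `scale` and used `type` instead of `name`). The variable, resource names, and capacity value are hypothetical, and the model name and version are only an illustration, so check what is actually available in your region.

```hcl
# Hypothetical input: the resource ID of an existing Azure OpenAI account.
variable "openai_account_id" {
  description = "Resource ID of an existing Azure OpenAI (Cognitive Services) account."
  type        = string
}

resource "azurerm_cognitive_deployment" "gpt4_regional" {
  name                 = "gpt-4-regional" # hypothetical deployment name
  cognitive_account_id = var.openai_account_id

  model {
    format  = "OpenAI"
    name    = "gpt-4"
    version = "1106-Preview" # illustrative; availability changes over time
  }

  # The deployment type is the SKU name.
  # "Standard" keeps processing in the account's region,
  # "GlobalStandard" allows processing outside of it.
  sku {
    name     = "Standard"
    capacity = 10 # assumed value; adjust to your quota
  }
}
```

The `sku.name` value here is the same property that the policy alias `Microsoft.CognitiveServices/accounts/deployments/sku.name` evaluates.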

Restrict deployment type with Azure Policy

When given access to an OpenAI service and the appropriate permissions on the AI Foundry hub, a developer can deploy models in whichever way they fancy. Neither deployment type nor region is restricted by default, although region will be restricted if you have policies for this in place, which I sincerely hope you do.
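If you do not have a region restriction yet, a rough sketch of one in Terraform could look like the following. It assigns the built-in “Allowed locations” policy at subscription scope. The variable name, assignment name, and the list of regions are my assumptions for illustration, so replace them with whatever your organization has approved.

```hcl
# Hypothetical input: full resource ID of the subscription,
# for example "/subscriptions/00000000-0000-0000-0000-000000000000".
variable "subscription_resource_id" {
  description = "Resource ID of the subscription to assign the policy on."
  type        = string
}

# Look up the built-in definition by its display name.
data "azurerm_policy_definition" "allowed_locations" {
  display_name = "Allowed locations"
}

resource "azurerm_subscription_policy_assignment" "allowed_locations" {
  name                 = "allowed-locations" # hypothetical assignment name
  policy_definition_id = data.azurerm_policy_definition.allowed_locations.id
  subscription_id      = var.subscription_resource_id

  # Assumed region list, based on the regions mentioned in this post.
  parameters = jsonencode({
    listOfAllowedLocations = { value = ["norwayeast", "swedencentral"] }
  })
}
```

This only controls where resources are created. It says nothing about the deployment type, which is why the custom policy in this post is still needed.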

After looking around I could not find a policy definition to restrict deployment type. Therefore I made one myself.

This policy denies any deployment unless it uses one of the allowed models (provided as a parameter) and the “Standard” deployment type. You can assign this policy at any scope, but a resource group or subscription is probably a suitable starting point. If you are adding this to a brownfield deployment you might want to start with the audit effect, and change to deny after testing.

    {
      "displayName": "Custom AI models restrict models and deployment types",
      "policyType": "Custom",
      "name": "##GENERATE A RANDOM GUID WITH POWERSHELL NEW-GUID##",
      "mode": "All",
      "description": "Restricts deployment type other than Standard and models other than those we explicitly allow.",
      "metadata": {
        "category": "AI Governance"
      },
      "parameters": {
        "effect": {
          "type": "String",
          "metadata": {
            "displayName": "Effect",
            "description": "Effect for the policy assigned."
          },
          "allowedValues": ["Audit", "Deny"],
          "defaultValue": "Deny"
        },
        "allowedModels": {
          "type": "Array",
          "metadata": {
            "displayName": "Allowed AI models",
            "description": "The list of allowed models to be deployed, each written as name,version."
          },
          "defaultValue": ["gpt-4,1106-Preview", "gpt-4o,2024-11-20", "gpt-4o-mini,2024-07-18"]
        }
      },
      "policyRule": {
        "if": {
          "allOf": [
            {
              "field": "type",
              "equals": "Microsoft.CognitiveServices/accounts/deployments"
            },
            {
              "anyOf": [
                {
                  "field": "Microsoft.CognitiveServices/accounts/deployments/sku.name",
                  "notEquals": "Standard"
                },
                {
                  "not": {
                    "value": "[concat(field('Microsoft.CognitiveServices/accounts/deployments/model.name'), ',', field('Microsoft.CognitiveServices/accounts/deployments/model.version'))]",
                    "in": "[parameters('allowedModels')]"
                  }
                }
              ]
            }
          ]
        },
        "then": {
          "effect": "[parameters('effect')]"
        }
      }
    }

This is a fairly basic policy definition, and it should give you an idea of how the deployment is denied. Note that each entry in allowedModels has to be written as name,version (for example gpt-4o,2024-11-20), because the rule compares the list against the model name and model version joined with a comma. The definition could easily be made more dynamic and flexible with more parameters, but I don’t need more flexibility at the moment. It serves as an adequate addition for specific governance of my AI deployments. If you are deploying with Terraform as described below, place this JSON file in a folder inside your Terraform code. I have saved it as lib/policy_definitions/policy.json in the example below.

It should be noted that you won’t get a nice “deployment failed because of policy” message. Instead you get an “identity x does not have write permissions on resource y” error. I am not sure why this happens; it might be a bug or something that will be fixed further down the road. Either way, this prevents deployment of GlobalStandard type models.

If you want to expand on this policy definition, and need to find aliases to use, you can find them in the great AzAdvertizer under AzAliasAdvertizer.

Basic deployment of policy with Terraform

Here’s an example of how you could create the policy definition and assign it on your subscription. If you have followed my previous post about delegated RBAC you will also have to add a role assignment like I have done below. If your SP already has owner permissions you don’t need that.

    # Requires the hashicorp/azurerm and hashicorp/time providers.

    locals {
      # Read the policy definition from the JSON file shown above.
      restrict_ai_models = jsondecode(file("${path.module}/lib/policy_definitions/policy.json"))
    }

    data "azurerm_client_config" "current" {}

    data "azurerm_subscription" "current" {}

    # Only needed if the deploying identity is not already Owner (see delegated RBAC).
    resource "azurerm_role_assignment" "policy_contributor" {
      principal_id         = data.azurerm_client_config.current.object_id
      scope                = data.azurerm_subscription.current.id
      role_definition_name = "Resource Policy Contributor"
    }

    # Give the role assignment time to propagate before creating the definition.
    resource "time_sleep" "wait_for_role" {
      create_duration = "30s"
      depends_on      = [azurerm_role_assignment.policy_contributor]
    }

    resource "azurerm_policy_definition" "restrict_ai_models" {
      display_name = local.restrict_ai_models.displayName
      mode         = local.restrict_ai_models.mode
      name         = local.restrict_ai_models.name
      policy_type  = local.restrict_ai_models.policyType
      description  = local.restrict_ai_models.description
      metadata     = jsonencode(local.restrict_ai_models.metadata)
      parameters   = jsonencode(local.restrict_ai_models.parameters)
      policy_rule  = jsonencode(local.restrict_ai_models.policyRule)
      depends_on   = [time_sleep.wait_for_role]
    }

    # Give the definition time to become available before assigning it.
    resource "time_sleep" "wait_for_policy_definition" {
      create_duration = "30s"
      depends_on      = [azurerm_policy_definition.restrict_ai_models]
    }

    resource "azurerm_subscription_policy_assignment" "assign_restrict_ai_models" {
      name                 = "Enforce OpenAI deployment type"
      description          = "Enforces Standard deployment type and allows only models which are available and approved."
      policy_definition_id = azurerm_policy_definition.restrict_ai_models.id
      subscription_id      = data.azurerm_subscription.current.id
      depends_on           = [time_sleep.wait_for_policy_definition]
    }

I have only done rudimentary testing of this policy and it goes without saying that I am not providing this as a production ready policy definition for you to immediately assign on your production resources. Use at your own risk and only after testing & verifying in your own lab/test environment.
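In that spirit of testing first, here is a rough sketch of how the brownfield approach mentioned earlier could look: the same definition assigned to a single resource group with the effect overridden to Audit. It reuses the azurerm_policy_definition.restrict_ai_models resource from the example above. The variable and the assignment name are hypothetical.

```hcl
# Hypothetical input: resource ID of the resource group to start with.
variable "resource_group_id" {
  description = "Resource ID of the resource group to audit."
  type        = string
}

resource "azurerm_resource_group_policy_assignment" "audit_restrict_ai_models" {
  name                 = "audit-openai-deployments" # hypothetical assignment name
  display_name         = "Audit OpenAI deployment type and models"
  resource_group_id    = var.resource_group_id
  policy_definition_id = azurerm_policy_definition.restrict_ai_models.id

  # Override the definition's default effect (Deny) for this assignment only.
  # allowedModels can be overridden in the same way if the defaults do not fit.
  parameters = jsonencode({
    effect = { value = "Audit" }
  })
}
```

Once the compliance results look the way you expect, changing the value to "Deny" (or removing the override) switches the assignment to enforcement.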

In summary

Use Azure Policy to take control over your Azure environment. Do not allow the resources, properties, or resource locations that you have not centrally vetted and approved of (of course based on company policy, not whims).

If data that is not suited for global processing ends up being processed globally through a GlobalStandard deployment, you could potentially have a GDPR breach on your hands, I assume.

As always, please comment and give me a heads up if I got something wrong or misunderstood anything! That’s the best way to learn.